Proxmox VE supports three types of virtualization technologies:

- Container virtualization (OpenVZ): it allows a physical server to run multiple isolated operating system instances, also known as containers. Its main limitation is that these containers (guests) can only be Linux instances. On the other hand, the OpenVZ kernel delivers better performance than the alternatives (a penalty of between 1% and 3% compared to a standalone server).

- Full virtualization (KVM): it can run both Linux and Windows guests but, unlike OpenVZ, it requires a CPU with Intel VT or AMD-V support.

- Paravirtualization (KVM): it presents the guest with a software interface similar to the underlying hardware in order to reduce the execution time of certain operations.
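The hardware requirement for KVM mentioned above can be checked before installing anything. The following is a minimal sketch, assuming a Linux system that exposes CPU flags in `/proc/cpuinfo`; the function name `has_hw_virt` is just an illustrative helper. It looks for the `vmx` (Intel VT) or `svm` (AMD-V) flag. Note that the flag may be present even if the feature is disabled in the BIOS, so a positive result is a hint rather than a guarantee.

```shell
#!/bin/sh
# Return success if a cpuinfo-style file lists the vmx (Intel VT)
# or svm (AMD-V) CPU flag. Defaults to /proc/cpuinfo.
# has_hw_virt is a hypothetical helper name, not a Proxmox tool.
has_hw_virt() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # -w matches whole words only, so other flags containing
    # these letters are not counted by mistake.
    grep -Eqw 'vmx|svm' "$cpuinfo" 2>/dev/null
}

if has_hw_virt; then
    echo "vmx/svm flag found: KVM guests should be possible"
else
    echo "no vmx/svm flag found: only OpenVZ containers will be usable"
fi
```

If the check fails on a machine that should support it, the virtualization extensions are probably switched off in the firmware settings.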

In my opinion, OpenVZ is a plus because it performs better than KVM, and the container idea is fantastic: the templates are really small and you can have a virtual machine ready in a few minutes.

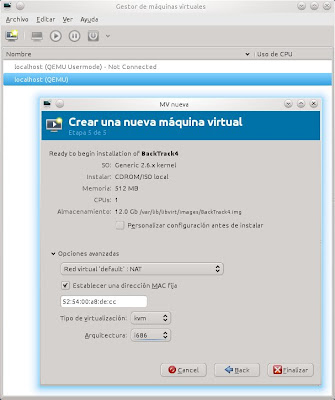

You can install Proxmox VE from an ISO image or directly on an existing Debian distribution (32-bit or 64-bit). I have tested Proxmox VE 1.7 (bare-metal ISO installer based on Debian Lenny) in a KVM virtual machine running on my Kubuntu 10.10.

The process is very simple. The Proxmox installer is based on a graphical wizard with several stages: location and time zone selection, password and email address definition, and network configuration.

What are the main features of this product?

- Web-based administration: easy deployment and management (web-based management and virtual console, backup and restore with LVM2 snapshots, etc.).

- Virtual appliances: they are fully pre-installed and pre-configured applications, including the operating system environment. You can create your own containers, get them from the community, use plain Linux OS instances or buy certified appliances.

- Proxmox VE cluster: it lets you group multiple physical servers into one VE cluster (central web management and login, cluster synchronization, easy cluster setup, live migration of virtual machines between physical servers, etc.).

When you finish the installation process, you must reboot the machine and update the system.

    proxmox:~# aptitude update ; aptitude dist-upgrade

To manage Proxmox VE, open a web browser and enter the IP address configured during the wizard (the default user is 'root').

The Proxmox web interface is very useful. It is organized into three main sections: VM Manager, Configuration and Administration.

In the VM Manager area, you can upload ISO images and OpenVZ templates, download certified appliances and create and handle virtual machines.
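For comparison, the container lifecycle handled by the VM Manager can be sketched from the shell with OpenVZ's own `vzctl` and `vzlist` tools. This is only an illustration and not necessarily how Proxmox drives OpenVZ internally: the container ID, template name and IP address are hypothetical placeholders, and the `run` wrapper only prints the commands unless `RUN=1` is set, so nothing is changed by accident.

```shell
#!/bin/sh
# Sketch: create and start an OpenVZ container by hand.
# CTID, TEMPLATE and IP are hypothetical placeholder values.
CTID="${CTID:-101}"
TEMPLATE="${TEMPLATE:-debian-5.0-standard_5.0-2_i386}"
IP="${IP:-192.168.1.101}"

# Print the command by default; execute it only when RUN=1.
run() {
    if [ "${RUN:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

create_container() {
    # Create the container from a template (name without .tar.gz)
    run vzctl create "$CTID" --ostemplate "$TEMPLATE"
    # Assign an IP address and persist it in the container config
    run vzctl set "$CTID" --ipadd "$IP" --save
    # Boot the container and list all containers with their status
    run vzctl start "$CTID"
    run vzlist -a
}

create_container
```

Running the script as-is prints the four commands; running it with `RUN=1` on an OpenVZ host would execute them, assuming the template has already been uploaded.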

In the Configuration area, you can set up the different system parameters (network, DNS, time, administrator options, language, proxy, etc.), add and manage storage (iSCSI targets, NFS shares, LVM groups and directories) and create new backup jobs.

And finally, in the Administration area you can control the Proxmox VE certificates and services (ClusterSync, ClusterTunnel, NTP, SMTP, SSH and WWW), take a look at the logs and monitor the cluster nodes.